This tweet from Paul Graham a while back gave me the perfect excuse to write about one of my favorite topics, the idea of thinking by writing:

Observation suggests that people are switching to using ChatGPT to write things for them with almost indecent haste. Most people hate to write as much as they hate math. Way more than admit it. Within a year the median piece of writing could be by AI.

— Paul Graham (@paulg) May 9, 2023

If we accept the premises that:

- Writing can be a forcing function, a technique, to help clarify thinking, and

- Generative AI simplifies writing and raises the floor on prose,

Then it follows that outsourcing writing to AIs necessarily means outsourcing thinking to AIs.

Granted, technology has a bad habit of doing similar things. Less than two decades ago, Google was so useful as a repository for information that we outsourced parts of our memories: tidbits that, with the advent of the smartphone, have been further relegated to trivia and small-talk fodder. Before that, the humble calculator made pen-and-paper arithmetic obsolete.

Well, our strategy with the calculator is to teach math without it first, and then introduce the tool gradually once kids have learned to add and subtract by hand. We've recognized that for the calculator to be useful, its user first needs to understand core mathematical concepts; only then do we make it trivially easy to punch in numbers. In the ideal case, calculators streamline repetitive tasks so their users can devote their mental energy to higher-order problems beyond simple arithmetic.

So while the pessimistic case has AI taking over most forms of writing and thus reducing the need for most thinking, the optimistic case sees AI handling mundane summaries and rote writing to make space for original thinking. The uncomfortable realization here is that AI can credibly replicate a substantial portion of college-level writing, which underscores how poorly we promote critical thinking, even in higher education.

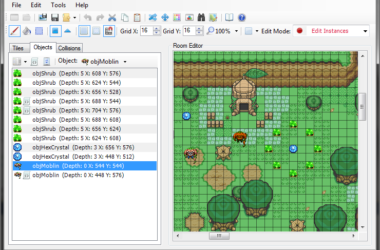

Like many disruptive technologies before it, AI is weakest when used as a coarse replacement for manual composition and editing. As a tool to support writers, though, it can help streamline sentences and summarize passages. My favorite analogy, comparing writing with coding, applies equally well here: a tool like GitHub Copilot works best with careful human guidance and verification, and the writing-as-thinking equivalent requires the same diligence in checking and integrating AI-generated output.

In practice, something like Grammarly illustrates a great use case. I've been running the plugin for a few months on all my blog posts here, as well as on my professional communications: namely, emails and formal documentation[^1]. The tool is great at catching typos, grammatical errors, redundancies, and overused clichés, but I often reject its attempts to rebuild sentences. Those rewrites may be technically correct, but they strip away the author's cadence and voice.

As for the thinking part, AI can play a similar role: machine learning models can, and already do, recall facts and remix parts of their training data. A human can then build on that output to generate unique, potentially novel insights, doing precisely the kind of thinking that AI supposedly threatens. And much like the coding and writing examples, those who can already do what the AI generates, and so use it as an aid rather than a crutch, stand to benefit most: they can hand off the easy stuff and focus on the hard parts that still require human intuition and understanding.

[^1]: I don't like AI touching Slack; I find that it overrides too much personality and renders messages bland and inhuman.